Some of our favourite apps would not exist without the intricate sensors built into today’s smartphones.

Sensors help save battery life, detect when the phone is rotated, and keep your phone secure with features such as fingerprint and facial recognition.

Regardless of your background, you may have ideas about how to make these sensors more accurate and efficient, how to close a process gap, or how to help different information systems communicate with one another.

Whether you’re a healthcare professional with a concept for a mobile product, a developer of mHealth apps, or someone interested in how an app can leverage smartphones’ full capabilities, you are probably aware of the enormous impact smartphone sensors have had on health and wellness apps.

This piece is the first in a series intended to outline data decision points for customized mobile app development.

This post in particular focuses on the capabilities and uses of inbuilt smartphone sensors and will help answer questions like:

“What information does the app need to serve its purpose?”

“How could the data be filtered, recombined, or transformed to provide actionable, useful services?”

“Is iOS or Android the better choice for the intended functionality, or do the requirements allow for platform-independent development?”

Let’s jump in!

What are smartphone sensors? What do they do? How are they used?

Smartphone sensors are built-in components that detect events or changes in their environment and convert them into signals that applications can read as data and interpret for specific uses.

The embedded sensors feed data to the phone’s own processor, but that data can also be combined with other information systems and technologies (wearable consumer devices and medical devices, health records, APIs, aggregators, predictive algorithms, and AI technologies).

Systems of smart sensors provide an unprecedented density of data and interconnectivity, from the phone itself, to external devices, to various kinds of processing centres. Together, they can capture, store, manage, transmit, interpret, and act on large volumes of user data.

Continual passive sensing happens without any extra effort on the user’s part. Sensors on smartphones translate the external world into digital information, first by responding to physical stimuli and then transmitting a resulting impulse for measurement or system response. The data is consumed by the operating system itself, and by software that combines and/or extends its functionality.

While it is possible to access raw sensor data, much of what is used by mobile apps is processed by the operating system into standardized formats that are then available to other applications and frameworks.

Some sensors are configured to report a “sensor event” each time they detect a change in the parameter they measure. Each event includes:

- the name of the sensor

- the raw sensor data that triggered the event

- the timestamp

- some indication of the accuracy of the event
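To make the four fields concrete, here is a minimal sketch of a sensor event as a plain data structure. It is loosely modeled on Android’s `SensorEvent` class, which exposes the sensor, its values, a timestamp, and an accuracy level, but the field names, the `is_usable` helper, and the threshold below are hypothetical choices for illustration.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class SensorEvent:
    """One sensor reading; field names are illustrative, not a platform API."""
    sensor_name: str           # which sensor produced the reading
    values: Tuple[float, ...]  # raw values, e.g. (x, y, z) for a motion sensor
    timestamp_ns: int          # when the reading was taken, in nanoseconds
    accuracy: int              # assumed scale: 0 (unreliable) to 3 (high)


def is_usable(event: SensorEvent, min_accuracy: int = 2) -> bool:
    """Keep only readings the sensor itself reports with enough confidence."""
    return event.accuracy >= min_accuracy


reading = SensorEvent("accelerometer", (0.12, 9.79, 0.31), 1_000_000_000, 3)
print(is_usable(reading))  # True
```

Filtering on the accuracy field is one simple way an app can avoid acting on readings the hardware already flags as unreliable.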

Some sensors return simple data streams, while others report data arrays. With filtering or calculation, and with additional sources of information, sensor data can be used to derive things as diverse as a compass bearing or the number of “flights of stairs” climbed on an afternoon hike.

So, how does all of this affect mobile apps and app development? Here is what you need to know about the sensors that help to make mobile applications so valuable and ubiquitous.

Smartphone Core Function Sensors

Microphone / Sound Sensor

The very word “telephone” is derived from the Greek words for “distant voice,” and there is no phone device at all without the conversion of sound into signals that are transmitted and received.

Microphones transform sound waves into electrical signals, which can be used to determine decibel level and frequency and to decide when noise cancellation is needed. They integrate with any microphone-enabled app (e.g., search engines, podcasting, and personal assistants like Siri, Google Assistant, and Alexa).
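As an illustration of how a decibel level can be derived from recorded audio, the sketch below computes the root-mean-square level in dBFS (decibels relative to digital full scale). It assumes the samples are already normalized to the range -1.0 to 1.0; converting dBFS into sound pressure level (dB SPL) would need a per-device calibration offset, which is not shown.

```python
import math
from typing import Sequence


def rms_dbfs(samples: Sequence[float]) -> float:
    """RMS level in dBFS for samples normalized to [-1.0, 1.0]."""
    if not samples:
        raise ValueError("need at least one sample")
    rms = math.sqrt(sum(s * s for s in samples) / len(samples))
    if rms == 0:
        return float("-inf")  # digital silence
    return 20 * math.log10(rms)


# One second of a full-scale 440 Hz tone sampled at 48 kHz
sine = [math.sin(2 * math.pi * 440 * n / 48_000) for n in range(48_000)]
print(round(rms_dbfs(sine), 2))  # -3.01
```

A full-scale sine wave has an RMS of about 0.707, which is why it sits roughly 3 dB below full scale.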

Any sound that can be heard, transmitted, and received can be recorded and analyzed. Primary uses are for wireless telecommunications, whether cellular or VoIP (Voice over Internet Protocol), audio/video recording, voice recognition, and voice searches and commands.

Healthcare applications of this functionality include patient and care-team communication (telehealth) as well as ear, heart, and lung health. For example, sound files can be used to analyze breathing and cough events, which could help assess pulmonary health and support remote asthma management and lung rehabilitation.

Touchscreen

Mobile apps still require active user input, and most of it is entered via the touchscreen.

All manual navigation and virtual keyboard entry relies on the capacitive touchscreen, the primary mode of user interaction on smartphones. A grid of transparent electrodes under the glass detects the change in capacitance where a finger makes contact, and the touch controller converts it into screen coordinates. Some phones also have responsive haptic systems that provide force feedback and touch interaction.

In addition to simple taps, capacitive screens support multi-touch gestures such as pinch-to-zoom and swipe. For mobile app development, this means touchscreen interaction shapes everything from the design stage onward, since it is the default way of gathering information. Phone sensors alone cannot measure a person’s weight, for example. You could get a person’s current weight from a wireless smart scale, from a photo of a manual scale’s readout, or from a spoken answer to a question, but in many cases it is more user-friendly to provide a drop-down menu or ask the user to type in a number.
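A pinch-to-zoom gesture boils down to comparing the distance between two touch points over time. This minimal sketch assumes touch coordinates in pixels and a hypothetical `pinch_scale` helper; in a real app the platform’s gesture recognizers (such as Android’s `ScaleGestureDetector` or iOS’s `UIPinchGestureRecognizer`) handle this bookkeeping.

```python
import math
from typing import Tuple

Point = Tuple[float, float]


def pinch_scale(start: Tuple[Point, Point], current: Tuple[Point, Point]) -> float:
    """Zoom factor implied by two fingers: >1 when spreading apart, <1 when pinching in."""
    d0 = math.dist(*start)    # finger separation when the gesture began
    d1 = math.dist(*current)  # finger separation now
    if d0 == 0:
        raise ValueError("initial touch points must differ")
    return d1 / d0


print(pinch_scale(((100, 100), (200, 100)), ((50, 100), (250, 100))))  # 2.0
```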

Camera / CMOS Image Sensor

Simply put, a smartphone camera’s image sensor receives the light that enters through the lens and produces a digital image from it. The sensor’s surface contains millions of photosites (pixels) that capture the light. Complementary Metal-Oxide Semiconductor (CMOS) sensors are by far the most common. They are active-pixel sensors: each photosite has its own amplifier that converts photo-generated charge to a voltage, amplifies the signal, and reduces noise. However, the line-by-line (rolling) readout that CMOS sensors typically use can skew images of fast-moving objects. The Charge-Coupled Device (CCD), less popular because of its power usage and cost, captures an image in one shot and converts it into a single sequence of voltages.

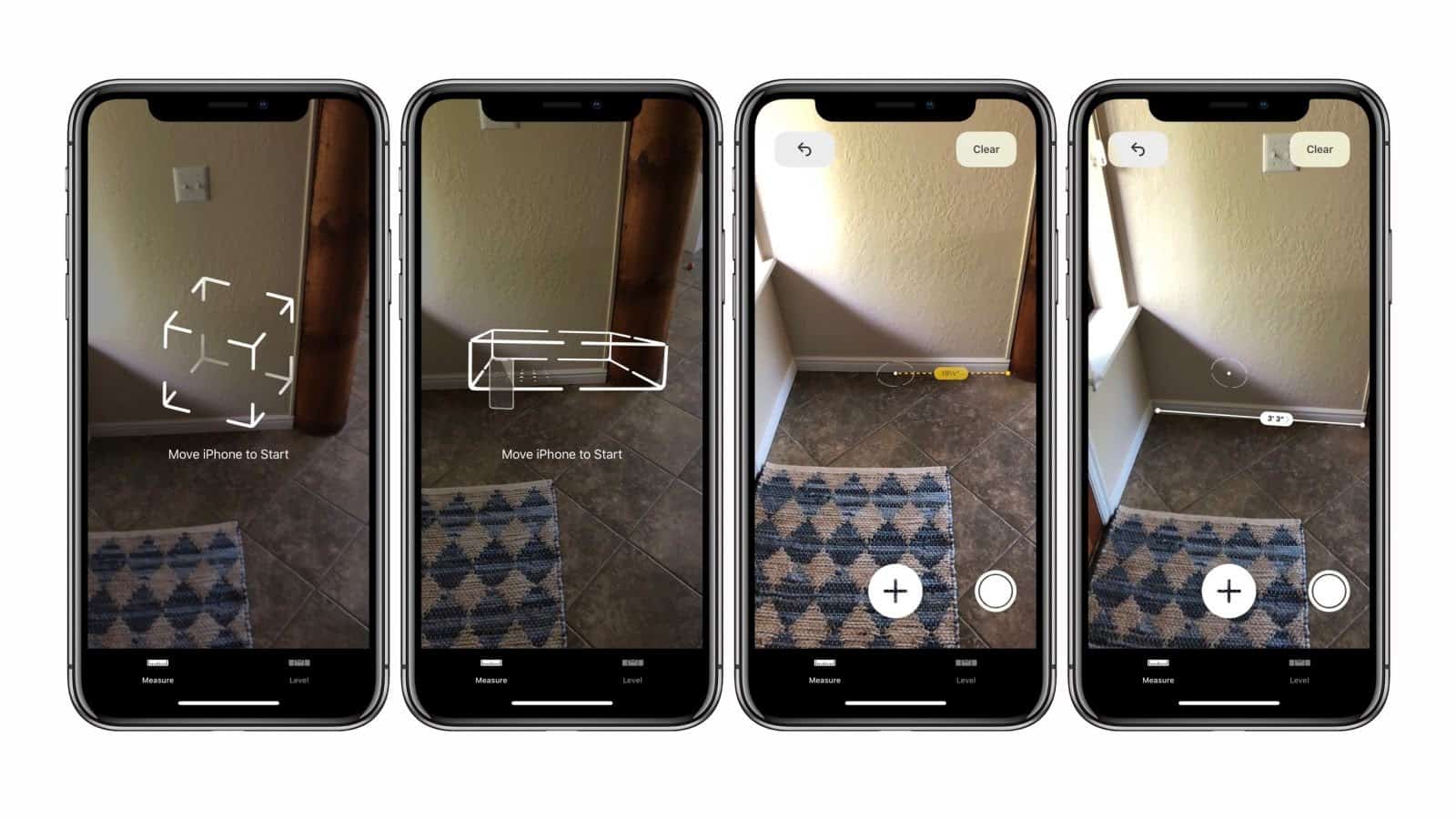

Smartphone cameras vary by factors such as sensor size, resolution (megapixels), pixel size, aspect ratio, and the quality, type, and number of lenses in the camera assembly. Additional features include image stabilization, zoom, HDR, RAW capture, and night modes. The camera can work in tandem with the microphone to produce audio-visual files such as presentations or videos. Some phones combine the camera with LiDAR and other depth sensors to map the surrounding space in three dimensions for augmented and virtual reality experiences.

When thinking of a smartphone camera’s primary use, “selfies” and personal photos quickly come to mind. However, cameras and the images they produce can be used in many other ways, including identity security and optical character recognition (OCR). In combination with software and other sensors, such as the internal gyroscope, the camera can be used to estimate length, angle, slope, level, height, width, and distance, and, on some devices with longer-range infrared (IR) detectors, even heat signatures.
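Estimating distance and height with the camera and motion sensors is largely trigonometry. This minimal sketch assumes the phone’s tilt angle (from the gyroscope and accelerometer) is known when the user aims first at the base and then at the top of an object, and that the camera’s height above the ground is known or entered by the user. Both function names are hypothetical.

```python
import math


def distance_to_base(camera_height_m: float, angle_down_deg: float) -> float:
    """Horizontal distance to a ground point seen angle_down_deg below horizontal."""
    if not 0 < angle_down_deg < 90:
        raise ValueError("angle must be between 0 and 90 degrees below horizontal")
    return camera_height_m / math.tan(math.radians(angle_down_deg))


def object_height(camera_height_m: float, distance_m: float, angle_to_top_deg: float) -> float:
    """Height of an object whose top is seen above (+) or below (-) horizontal."""
    return camera_height_m + distance_m * math.tan(math.radians(angle_to_top_deg))


# Camera held 1.5 m above the ground, aimed 45 degrees down at the base, 45 degrees up at the top
d = distance_to_base(1.5, 45.0)
print(round(d, 2), round(object_height(1.5, d, 45.0), 2))  # 1.5 3.0
```

Accuracy depends heavily on the tilt reading and the assumed camera height, which is why such apps usually ask the user to confirm the height.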

Camera and video capabilities are essential for telehealth applications such as online appointments. In dermatology, melanoma classification can draw on features extracted from an image, such as contrast, variance, area, diameter, and perimeter. Heart rate (HR), heart rate variability (HRV), and arterial oxygen saturation can be estimated from video of illuminated skin, because the skin’s transmission and reflection of light fluctuate as arterial blood pulses through the tissue. However, continuous monitoring has largely moved to wearable devices with mobile connectivity.
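The video-based heart rate idea can be sketched as follows, assuming the app has already reduced each video frame to one number (for example, the mean red-channel brightness of a fingertip pressed over the lens). Counting upward zero crossings of the mean-removed signal is a deliberately simplified stand-in; a real photoplethysmography pipeline would add band-pass filtering and motion-artifact rejection. The `estimate_bpm` name and the synthetic signal are illustrative.

```python
import math
from typing import Sequence


def estimate_bpm(brightness: Sequence[float], fps: float) -> float:
    """Estimate pulse rate from per-frame mean brightness (simplified)."""
    mean = sum(brightness) / len(brightness)
    centered = [b - mean for b in brightness]
    # Frames where the signal crosses from below the mean to at/above it: one per beat
    crossings = [i for i in range(1, len(centered)) if centered[i - 1] < 0 <= centered[i]]
    if len(crossings) < 2:
        raise ValueError("signal too short to contain two beats")
    avg_interval_frames = (crossings[-1] - crossings[0]) / (len(crossings) - 1)
    return 60.0 * fps / avg_interval_frames


# Synthetic 10-second clip at 30 fps with a 1.2 Hz (72 bpm) brightness pulse
fps = 30
signal = [100 + 2 * math.sin(2 * math.pi * 1.2 * n / fps + 0.3) for n in range(300)]
print(round(estimate_bpm(signal, fps), 1))  # 72.0
```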

Partly because of the pandemic, Quick Response (QR) codes have gained popularity for contactless in-store ordering and checkout, as well as for direct links to relevant applications and websites. Dynamic QR codes can use identifiers, locators, or trackers, and do not require Near Field Communication (NFC) or Bluetooth. QR codes were originally used by Toyota to keep track of car parts during manufacturing, and they have enormous potential for streamlining processes.

In healthcare, examples include mobile patient identification and tracking from admission through discharge, with links to post-treatment instructions. Drug packaging information, medical equipment information and inventory management, and many other processes can also benefit from QR codes.

iPhones (iOS 11 and later) and some Android devices can scan QR codes natively, without downloading a separate app. Other barcode types (Code 39, NDC, Data Matrix ECC 200, EAN-8, EAN-13, UPC-A, ITF) are still used for surgical instrument identification and sterilization, specimen collection, patient wristbands, and medical supplies, and can also be scanned as part of interoperability efforts.
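Barcode formats such as EAN-13 end with a check digit so that scanners can reject misreads. The sketch below validates an EAN-13 number with the standard alternating 1-and-3 weighting. It covers only the number itself; the optical decoding would be handled by a scanning library or the platform’s camera APIs.

```python
def ean13_is_valid(code: str) -> bool:
    """Validate an EAN-13 string using its final check digit."""
    if len(code) != 13 or not all(c in "0123456789" for c in code):
        return False
    digits = [int(c) for c in code]
    # Weights alternate 1, 3, 1, 3, ... across the first 12 digits
    total = sum(d * (3 if i % 2 else 1) for i, d in enumerate(digits[:12]))
    check = (10 - total % 10) % 10
    return check == digits[12]


print(ean13_is_valid("4006381333931"))  # True
print(ean13_is_valid("4006381333932"))  # False
```

A 12-digit UPC-A code can be checked the same way by prefixing it with a 0.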

Biometric Identity Sensors

Many smartphones use biometric methods alongside passcodes to verify identity for phone security and financial transactions. Fingerprint readers can scan using light, capacitance, or ultrasound. Facial recognition software uses the camera and/or an infrared sensor to interpret the 2D or 3D geometry of your face and other features, such as skin texture or the iris pattern of the eye.

Depending on the phone model, identity verification may or may not rely on additional dedicated hardware sensors.

Environmental Sensors

Environmental sensors monitor the ambient environmental conditions, return a single sensor value according to a standard unit of measure, and do not typically require data filtering or data processing.

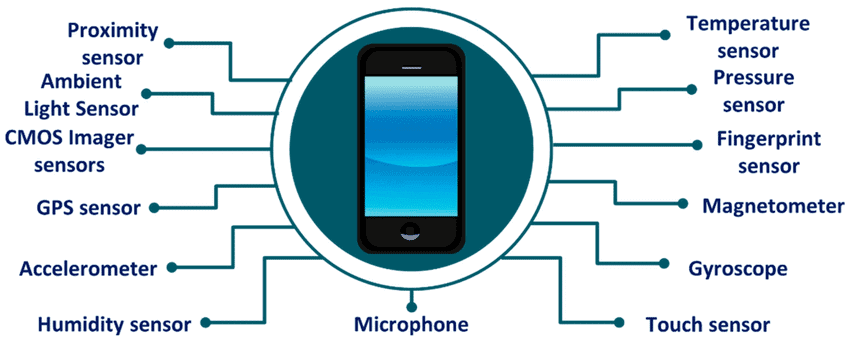

This image shows some of the most common environmental sensors in mobile devices, along with other sensors discussed in this post.

Here is an overview of what these sensors do and how they help consumers:

Photometer / Light Sensor

The ambient light sensor generates a stronger or weaker electrical signal depending on the brightness of the surrounding light. The phone’s software uses this data to adjust the display’s brightness. Sensors on some models can also measure the separate intensities of red, green, blue, and white light to fine-tune how images are displayed.

Proximity / IR Light Sensor

The proximity sensor pairs an infrared LED with an infrared light detector: the LED emits invisible infrared light, and the detector responds to the light that bounces back from an object within a given distance. The sensor sits near the phone’s earpiece and signals the system to dim and disable the screen when your face is close to it, which prevents accidental touch input and saves battery life. The proximity sensor can also detect wave gestures for hands-free control of media, calls, or other apps.

Barometer / Air Pressure Sensor

The barometer measures atmospheric pressure by detecting changes in electrical resistance or capacitance. It is used to report ambient air pressure, estimate and correct altitude data, improve GPS location accuracy, and help derive data such as the number of floors a pedestrian has climbed.
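Converting a pressure reading into altitude commonly uses the international barometric formula, the standard-atmosphere approximation behind Android’s `SensorManager.getAltitude`. The `floors_climbed` helper is hypothetical and assumes about 3 m per floor. Because weather shifts absolute pressure, comparing two readings taken a few minutes apart is more reliable than trusting a single absolute altitude.

```python
SEA_LEVEL_HPA = 1013.25  # standard atmosphere; a local reference pressure is better if known


def pressure_to_altitude_m(pressure_hpa: float, p0_hpa: float = SEA_LEVEL_HPA) -> float:
    """International barometric formula (standard-atmosphere approximation)."""
    return 44330.0 * (1.0 - (pressure_hpa / p0_hpa) ** (1.0 / 5.255))


def floors_climbed(p_start_hpa: float, p_end_hpa: float, floor_height_m: float = 3.0) -> int:
    """Whole floors gained between two readings; floor height is an assumption."""
    gain = pressure_to_altitude_m(p_end_hpa) - pressure_to_altitude_m(p_start_hpa)
    return max(0, int(gain // floor_height_m))


# A drop of 1.2 hPa near sea level corresponds to roughly 10 m of ascent
print(floors_climbed(1013.25, 1012.05))  # 3
```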

Thermometer / Temperature Sensor

Every smartphone has an internal temperature sensor to monitor the temperature inside the device and its battery for alerts on overheating and shutdown. However, sensors to measure the ambient temperature near the device are not available for iOS (iPhones), and are not included in enough Android devices to justify app development.

The obvious medical application is an infrared non-contact thermometer to check for fever, but the Honor Play 4 Pro (released by Huawei’s then sub-brand Honor and sold only in China) is the only smartphone so far to include one. Several wireless thermometers on the market can be paired with a phone to provide accurate temperature data.

Hygrometer / Humidity Sensor

The Samsung Galaxy S4 pioneered the relative humidity sensor, which reports the amount of moisture in the air as a percentage of the maximum the air can hold at the current temperature. Combining humidity and temperature readings makes it possible to calculate dew point and absolute humidity. The Samsung Health application used this combination to tell users whether they were in their “comfort zone” of air temperature and humidity. Few devices currently measure external ambient humidity or temperature, though, since dedicated devices do a better job of it.
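Dew point and absolute humidity follow from temperature and relative humidity through the Magnus approximation. The constants below are the ones Android’s sensor documentation gives for this calculation; the function names are illustrative, and the results are estimates rather than calibrated measurements.

```python
import math

M = 17.62    # Magnus coefficient (dimensionless)
TN = 243.12  # Magnus coefficient, degrees Celsius
A = 6.112    # saturation vapour pressure at 0 degrees Celsius, hPa


def dew_point_c(temp_c: float, rh_percent: float) -> float:
    """Temperature at which the current air would become saturated."""
    gamma = math.log(rh_percent / 100.0) + M * temp_c / (TN + temp_c)
    return TN * gamma / (M - gamma)


def absolute_humidity_g_m3(temp_c: float, rh_percent: float) -> float:
    """Grams of water vapour per cubic metre of air."""
    vapour_pressure_hpa = (rh_percent / 100.0) * A * math.exp(M * temp_c / (TN + temp_c))
    return 216.7 * vapour_pressure_hpa / (273.15 + temp_c)


print(round(dew_point_c(20.0, 50.0), 1), round(absolute_humidity_g_m3(20.0, 100.0), 1))
```

At 100% relative humidity the dew point equals the air temperature, which is a quick sanity check for any implementation.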

Position and Motion Sensors

Unlike environmental sensors, position and motion sensors return multidimensional sensor values.

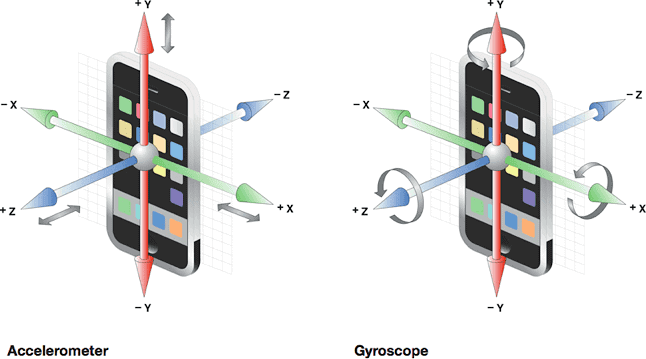

Each event returns data for each of the three coordinate axes: X (width), Y (height), and Z (depth, perpendicular to the screen). The sensors’ coordinate system is physically tied to the phone and does not rotate, but the coordinate system of the screen orientation (or activity) does. If an app uses sensor data to position views or other elements on the screen, it must transform the incoming data to match the device’s current rotation relative to its default orientation.
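For the X and Y components, that transformation is a rotation by the screen’s current rotation. This sketch assumes `rotation_deg` is the angle the device has been turned counter-clockwise from its natural orientation (0, 90, 180, or 270); sign conventions differ between platforms and APIs, so check your platform’s documentation. On Android, `SensorManager.remapCoordinateSystem` performs the equivalent remapping on a rotation matrix.

```python
from typing import Tuple


def to_screen_axes(x: float, y: float, rotation_deg: int) -> Tuple[float, float]:
    """Rotate device-frame X/Y sensor values into the current screen frame."""
    if rotation_deg == 0:
        return x, y
    if rotation_deg == 90:
        return -y, x
    if rotation_deg == 180:
        return -x, -y
    if rotation_deg == 270:
        return y, -x
    raise ValueError("rotation must be 0, 90, 180 or 270")


# Phone turned into landscape: gravity now lies along the device X axis,
# but it should still read as "down the screen" (screen Y) for a game or level app
print(to_screen_axes(9.81, 0.0, 90))
```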

Magnetometer

The geomagnetic field sensor measures the strength and direction of the magnetic field along the X, Y, and Z axes. It works by detecting changes in the electrical resistance of the anisotropic magnetoresistive materials inside it and varying its voltage output in response. This helps establish a reference frame for other sensors’ data and tells applications how the phone is oriented relative to the Earth’s magnetic north. The magnetometer enables standalone compass apps, and it works in tandem with data from the accelerometer and GPS unit to determine where you are and which way the phone is pointing.
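A tilt-compensated compass heading can be computed from the gravity vector (accelerometer) and the magnetic field vector (magnetometer) using two cross products, similar to the construction used by Android’s `SensorManager.getRotationMatrix`. This is a sketch under assumptions: device axes follow the X-right, Y-up, Z-out-of-screen convention, the gravity vector is measured while the phone is roughly still, and the result is relative to magnetic north, so a map app would still correct for local declination.

```python
import math
from typing import Tuple

Vec = Tuple[float, float, float]


def cross(a: Vec, b: Vec) -> Vec:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def normalize(v: Vec) -> Vec:
    n = math.sqrt(v[0] ** 2 + v[1] ** 2 + v[2] ** 2)
    if n == 0:
        raise ValueError("zero-length vector")
    return (v[0] / n, v[1] / n, v[2] / n)


def heading_deg(gravity: Vec, magnetic: Vec) -> float:
    """Degrees clockwise from magnetic north that the top of the phone points."""
    east = normalize(cross(magnetic, gravity))  # horizontal unit vector pointing east
    north = normalize(cross(gravity, east))     # horizontal unit vector pointing magnetic north
    # The Y components give east/north parts of the phone's "up the screen" axis
    return math.degrees(math.atan2(east[1], north[1])) % 360.0


# Phone lying flat (gravity on +Z); field values in microtesla are illustrative
print(round(heading_deg((0.0, 0.0, 9.81), (0.0, 20.0, -40.0)), 1))   # facing north
print(round(heading_deg((0.0, 0.0, 9.81), (-20.0, 0.0, -40.0)), 1))  # facing east
```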

Compass mode in Google Maps or Apple Maps uses magnetometer data to determine which way should be up on the map. At short range, the magnetometer also detects magnetic metal, which metal detector apps for iOS and Android exploit in various ways.

Gyroscopes and accelerometers work together to sense the orientation, tilt and rotation of the device and how much it accelerates along a linear path.

Accelerometers / Gravitational Sensors

The accelerometer is an electromechanical device that measures the acceleration applied to the device, relative to free fall, along the X, Y, and Z axes. Gravity looks like acceleration to these sensors. The accelerometer therefore responds whenever the device speeds up, slows down, or changes direction.

Filters and software algorithms are normally applied to the raw data to reduce noise and to remove (or isolate) the force of gravity. Combined with the magnetometer, the accelerometer is used to calculate the phone’s orientation along its three axes, for example whether the device is in portrait or landscape orientation, or whether its screen is facing up. It can also be used with the camera to measure angles and level objects, to derive data for pedometers and alternative speedometers, and even to report a “shake” gesture to the system for a programmed response.
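Separating gravity from device motion is often done with a simple exponential low-pass filter, as in the example in Android’s sensor documentation, which uses a smoothing factor of 0.8. The `GravityFilter` class and `screen_orientation` helper below are hypothetical names; the orientation rule simply reports which axis gravity dominates.

```python
from typing import List, Tuple

Vec = Tuple[float, float, float]


class GravityFilter:
    """Exponential low-pass filter that separates gravity from device motion."""

    def __init__(self, alpha: float = 0.8):
        self.alpha = alpha  # closer to 1.0 = smoother but slower-reacting gravity estimate
        self.gravity: List[float] = [0.0, 0.0, 0.0]

    def update(self, reading: Vec) -> Vec:
        """Feed one accelerometer reading; return the linear (non-gravity) acceleration."""
        for i in range(3):
            self.gravity[i] = self.alpha * self.gravity[i] + (1 - self.alpha) * reading[i]
        return (reading[0] - self.gravity[0],
                reading[1] - self.gravity[1],
                reading[2] - self.gravity[2])


def screen_orientation(gravity: Vec) -> str:
    """Coarse orientation from a gravity estimate (X right, Y up, Z out of screen)."""
    x, y, z = gravity
    if abs(z) > max(abs(x), abs(y)):
        return "face up" if z > 0 else "face down"
    return "portrait" if abs(y) >= abs(x) else "landscape"


# Phone held upright and still: gravity settles on +Y, linear acceleration decays to ~0
f = GravityFilter()
for _ in range(50):
    linear = f.update((0.0, 9.81, 0.0))
print(screen_orientation(tuple(f.gravity)), [round(v, 3) for v in linear])
```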

Gyrometer / Gyroscopes

Gyroscopes measure the rate of rotation around each of the three axes. The Micro-Electro-Mechanical Systems (MEMS) gyroscopes in smartphones need no spinning wheels or gimbals; instead, a tiny vibrating mass is deflected by the Coriolis effect when the phone rotates, and the chip measures that deflection electrically. Gyroscopes are usually integrated with the accelerometers along the same X, Y, and Z axes so that position, motion paths, acceleration, and rotation can be determined together, giving more accurate tilt angles and velocity estimates. We can thank this sensor for smooth tilt-and-turn gesture controls, rotational measurement and stabilization in camera apps, and real-time mapping of the phone’s direction to constellations in stargazing apps.
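A gyroscope reports angular velocity, so an app recovers an angle by integrating it over time. This sketch uses the trapezoidal rule and assumes a fixed sample interval; `integrate_rate` is a hypothetical helper. The second example shows why raw integration is rarely used alone: even a small constant bias in the readings accumulates into drift.

```python
from typing import Sequence


def integrate_rate(rates_dps: Sequence[float], dt_s: float, start_deg: float = 0.0) -> float:
    """Integrate angular rate samples (degrees/second) into an angle (degrees)."""
    angle = start_deg
    # Trapezoidal rule: average each pair of neighbouring samples over one interval
    for prev, curr in zip(rates_dps, rates_dps[1:]):
        angle += 0.5 * (prev + curr) * dt_s
    return angle


# A 1-second turn at 90 deg/s, sampled at 100 Hz, integrates to about 90 degrees
print(round(integrate_rate([90.0] * 101, 0.01), 6))
# A still phone with a 0.5 deg/s bias appears to turn about 30 degrees in one minute
print(round(integrate_rate([0.5] * 6001, 0.01), 6))
```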

What does all this mean?

With sensor capabilities alone, users can interact with apps, self-report textual data, capture images and sounds, and track certain kinds of positionality and movement.

Not all sensors are built-in hardware. Many mobile apps create additional virtual sensors by applying specialized calculations to some combination of accelerometer, gyroscope, and magnetometer data. The orientation of the phone itself, for example, is calculated by combining accelerometer data with gyroscope or magnetometer data. A gravity sensor can be dedicated hardware, but gravity can also be calculated by combining motion sensor data and filtering out the acceleration caused by device movement.
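One lightweight way to build such a virtual orientation sensor is a complementary filter, which trusts the gyroscope over short time spans and the accelerometer over long ones. This is a common textbook technique, not necessarily what any given platform uses internally; the function names, the 0.98 weight, and the axis convention (tilt about X, Z out of screen) are assumptions for illustration.

```python
import math


def accel_pitch_deg(ay: float, az: float) -> float:
    """Tilt about the X axis implied by gravity alone: noisy, but it does not drift."""
    return math.degrees(math.atan2(ay, az))


def complementary_update(pitch_deg: float, gyro_rate_dps: float,
                         ay: float, az: float, dt_s: float, k: float = 0.98) -> float:
    """One filter step: integrated gyro angle (weight k) blended with accelerometer angle."""
    gyro_estimate = pitch_deg + gyro_rate_dps * dt_s
    return k * gyro_estimate + (1 - k) * accel_pitch_deg(ay, az)


# Phone lying flat and still for 60 s at 100 Hz, with a 0.5 deg/s gyroscope bias
pitch = 0.0
for _ in range(6000):
    pitch = complementary_update(pitch, 0.5, ay=0.0, az=9.81, dt_s=0.01)
print(round(pitch, 3))  # settles near 0.245 degrees instead of drifting to about 30
```

The accelerometer term keeps pulling the estimate back toward the true tilt, so the gyroscope bias produces a small fixed offset rather than unbounded drift.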

With modest processing and interpretation, the linear and angular movements of a body in motion, such as gait patterns and events like falls, can be classified and interpreted, and an app can send notifications when activity deviates significantly from habitual routines. Add GPS location and integration with mapping services, and you can determine latitude and longitude, making it possible to record both position and movement in real-world terms.
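A common starting point for fall detection is to look for a brief near-free-fall phase (total acceleration well below 1 g) followed shortly by an impact spike. The sketch below implements that heuristic; the thresholds and window length are illustrative assumptions, not validated clinical values, and `detect_fall` is a hypothetical name.

```python
import math
from typing import Sequence, Tuple

Vec = Tuple[float, float, float]
G = 9.81  # standard gravity, m/s^2


def magnitude_g(v: Vec) -> float:
    """Total acceleration in units of g, regardless of how the phone is held."""
    return math.sqrt(v[0] ** 2 + v[1] ** 2 + v[2] ** 2) / G


def detect_fall(samples: Sequence[Vec], hz: float, free_fall_g: float = 0.4,
                impact_g: float = 2.5, window_s: float = 1.0) -> bool:
    """Flag a near-free-fall phase followed by an impact spike within window_s seconds."""
    window = int(window_s * hz)
    mags = [magnitude_g(s) for s in samples]
    for i, m in enumerate(mags):
        if m < free_fall_g and any(later > impact_g for later in mags[i + 1:i + 1 + window]):
            return True
    return False


still = [(0.0, 0.0, 9.81)] * 50
fall = still + [(0.0, 0.0, 1.0)] * 10 + [(0.0, 3.0, 30.0)] + still
print(detect_fall(still, hz=50), detect_fall(fall, hz=50))  # False True
```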

Conclusion

An effective mobile app can use all the sensor data available within the smartphone itself to further its specific healthcare purpose, but the rapid growth of external devices and systems of devices greatly expands the range of possible applications.

Knowing about the sensors available in smartphones and how to integrate them into your app can bring an elevated experience and richer data to both you and your users.

If you enjoyed this piece, stay tuned! The next post in this series will look at how external sensors and the Internet of Medical Things (IoMT) extend these capabilities.

Make sure you are subscribed to our blog to receive a notification when we roll out the rest of the posts in this series and more content on mobile trends.